(Almost) Building Tacklr - an AI phishing protection tool for consumers

The step-by-step process I used to attempt to validate an idea for an AI phishing protection product for consumers.

I’ve recently been spending some time researching markets and ideas for new ventures, and have been going through a very systematic process of attempting to validate the hypotheses behind my most promising ideas.

Drawing on my own experience and on the structured approach to validating ideas taught in a recent Reforge course on finding product-market fit, I was able to quickly test an idea and ultimately decided not to pursue it, as I was unable to de-risk my riskiest assumptions.

In this post, I’m going to share the exact process I used.

The Idea

During Covid and after, I had seen an increase in articles in the media talking about phishing scams robbing people blind across the world. There are a number of B2B companies attempting to solve this problem for corporations, but consumers have largely been ignored even though in the US alone $3B(!!) is stolen from people over the age of 50 in phishing scams annually. While spam teams at the big free email providers such as Google are working hard to block these attacks, they are still getting through as can be seen by the occasional phishing email landing in my own mailbox and the statistic I just mentioned.

I started my career in IT security and have always wanted to go back to this field to solve some of the bigger problems there. After looking into how big the phishing problem is globally, I thought that there could be an opportunity here in the consumer space for a phishing protection tool that integrates into existing services and workflows.

Hypotheses

I came up with the following hypotheses:

Market need

- With the increasing number of phishing attacks against consumers, especially in countries like the US and the UK, an easy-to-use solution would be well received by the older and less tech-savvy population that are commonly the victims of these attacks.

Technical feasibility

- Thanks to advances in AI/ML capacity, companies have sprung up over the last few years that use AI models to analyse email content and associated metadata to detect nefarious emails. I believe that I can put together a team of AI specialists who could come up with a similar detection method catering to consumers.

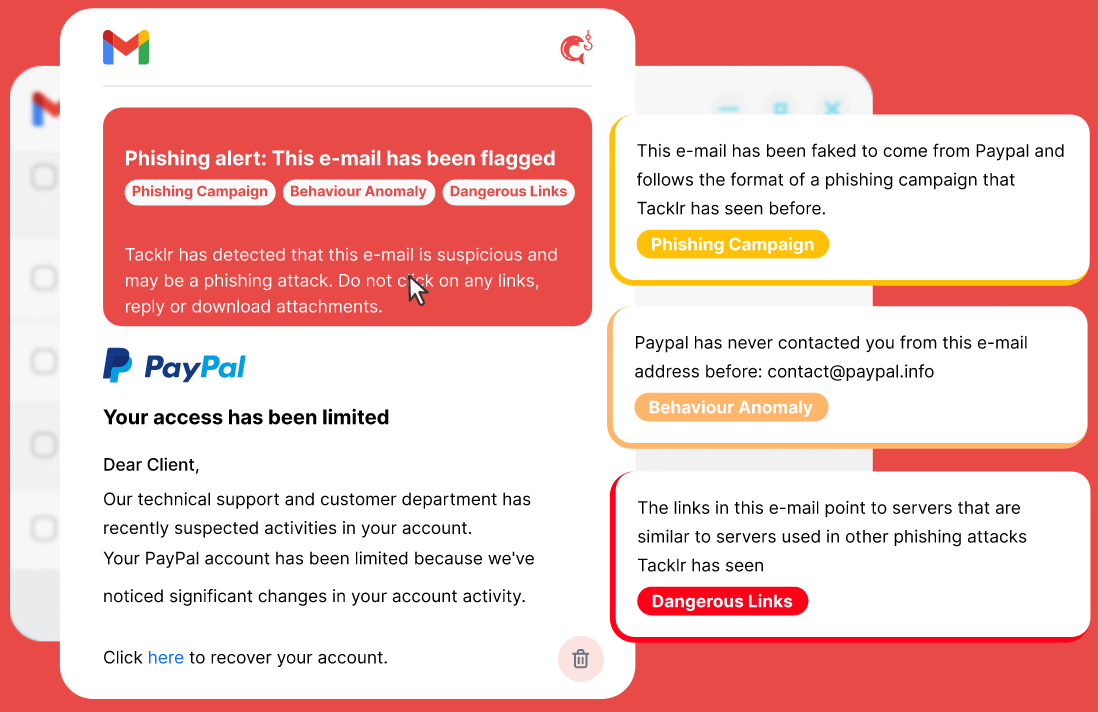

- I believe that a browser extension could be a simple and effective way of delivering this service, interacting with Gmail to analyse emails and alert users right within the Gmail UI.

Competition

- Google, among others, does have a well-resourced spam & malware team but has not been able to protect its free users adequately. I lacked a solid hypothesis here. I hoped to find out why these providers have not been able to offer a higher level of protection, which might in turn lead me to a hypothesis about why I would be better placed to improve on their capabilities.

Adoption

- While consumers are increasingly concerned about security, tools such as Grammarly (initially referred to as a ‘keylogger’ in some circles) may have paved the way for add-on services that need access to your data to do their job. As one of the ways Grammarly delivers its service is via a browser plugin on top of Gmail, I believe that the friction to adopt a service that technically works in the same way may now be lower.

Willingness to pay

- Again using Grammarly as an example, I believe consumers are getting accustomed to paying for tools that integrate into the existing services they use to add additional functionality to them. Gmass and Streak are other examples of this that add functionality to Gmail.

ICP

- While more tech-savvy individuals are generally not worried enough about being fooled by phishing emails, they might be interested in a solution that protects members of their family who aren’t as tech-savvy. Older people who are likely less confident in their ability to detect nefarious emails, but are used to purchasing services online, could also be interested in a product of this sort. I believe that these could be two valid customer segments.

There were other hypotheses around acquisition and possible growth loops, but I put these into a second bucket that I would have tested further had I been able to de-risk these initial, most critical ones.

Validating Riskiest Hypotheses

Taking the above list, I prioritised and ordered the list by risk level:

1. Technical feasibility

2. Competition

3. Market interest & willingness to pay

1. Technical feasibility

I met with some of the leading AI/ML experts here in Spain to figure out what it would take to come up with an MVP for this idea. Armorblox is a US-based company that recently released a similar product for the enterprise, which we used as an example to model our MVP after.

We came to the conclusion that a similar detection mechanism is feasible with our resources. Delivery of the service would be through a browser extension that sends each incoming email to our AI model, which decides whether it is a phishing attack or not.

The additional requirement that became clear fairly quickly here was the need for a LARGE corpus of phishing emails to train the models. Public datasets were not available, and the few datasets that I did come across were being guarded by academic institutions and given out only to academics, much to my dismay. In the end, I did manage to find an organisation that held a large number of phishing emails and with which a bilateral data-sharing agreement was possible.

The last technical hurdle that presented itself was that Google now requires security audits of all browser extensions that wish to use the Gmail API, which according to their documentation would cost between $15,000 and $75,000. While I appreciate Google’s need to be cautious here, the prices seemed outrageous for the small extension that I wanted to test this idea with.

Having made a quick list of a handful of extensions that appear to use the Gmail API, I was able to get on the phone with some of these developers to understand how this audit process went for them and the associated costs. I was reassured that the price range stated in the documentation was on the higher side and that with a small extension like what I had in mind, the price would be significantly lower.

This de-risked the hypothesis: it is technically feasible for me to get an AI model built to detect phishing emails, trained on hundreds of thousands of real phishing emails, and delivered as an MVP via a browser extension that, after a successful audit, interfaces with the Gmail API to do what it needs to.
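To give a rough feel for what "a model trained on labelled emails" means, here is a toy sketch in Python. It is a naive Bayes text classifier, a deliberately simple stand-in and not the detection approach discussed with the AI/ML experts. The class name, the labels and the four training emails are all made up for illustration; a real system would train on a far larger corpus and would also look at metadata, not just words.

```python
import math
import re
from collections import Counter

LABELS = ("phish", "ham")


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class ToyPhishingFilter:
    """Hypothetical naive Bayes classifier with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = {label: 0 for label in LABELS}

    def train(self, text, label):
        """Record one labelled email ('phish' or 'ham')."""
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def phishing_probability(self, text):
        """Return the estimated probability that the text is phishing."""
        if min(self.doc_counts.values()) == 0:
            raise ValueError("need at least one training email per label")
        vocab = set(self.word_counts["phish"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        # Words never seen in training carry no evidence, so skip them.
        words = [w for w in tokenize(text) if w in vocab]
        log_scores = {}
        for label in LABELS:
            total_words = sum(self.word_counts[label].values())
            score = math.log(self.doc_counts[label] / total_docs)
            for word in words:
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(vocab)))
            log_scores[label] = score
        # Turn log scores into a probability in a numerically stable way.
        top = max(log_scores.values())
        weights = {label: math.exp(s - top) for label, s in log_scores.items()}
        return weights["phish"] / sum(weights.values())


# Made-up training emails, for illustration only.
toy_filter = ToyPhishingFilter()
toy_filter.train("Your account has been suspended, verify your password now", "phish")
toy_filter.train("Urgent: confirm your bank details to avoid suspension", "phish")
toy_filter.train("Lunch on Friday? Let me know what time works", "ham")
toy_filter.train("Here are the meeting notes from today's call", "ham")

suspicious = toy_filter.phishing_probability("Please verify your account password")
harmless = toy_filter.phishing_probability("Meeting notes from the call")
print(f"suspicious: {suspicious:.2f}, harmless: {harmless:.2f}")
```

With only four training emails the scores mean very little, which is exactly why the size of the training corpus mattered so much for the MVP.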

2. Competition

I could not find any serious competitors that were targeting the same problem for consumers: always a worrisome sign. There had been some attempts in the past, by established AV vendors as well as by a company that used a crowd-sourced detection model, but these failed due to not finding the right business model (selling users’ browsing data without them knowing generally isn’t the smartest way to go about things!). In short, it seemed that a technically advanced, paid-for phishing protection solution for consumers currently does not exist. Huge opportunity, or a massive red flag.

That left me with Google and the rest of the free email provider behemoths. I came across various posts by their spam teams on how they have incorporated AI filters and are now stopping 99.9% of all malicious emails from reaching users’ mailboxes. Yet phishing scams targeting people using these services were still on the rise, and just that week I received what looked like a simple phishing email in my Gmail account. Is it really that difficult to detect some of these seemingly simple scams? Is it a cost issue? Would more sensitive filters push the false positive rate to a level unacceptable to Google? Or is Google working under other constraints?

I tried reaching out to more than a handful of people at Google, people that know someone at Google, people that used to work at Google, all to understand why this was such a difficult problem to fix and if there was space for a third party to come in and make their users more secure. I was not able to get any more information from any of these people.

This hypothesis remains unvalidated and severely risky.

3. Market Interest & Willingness to pay

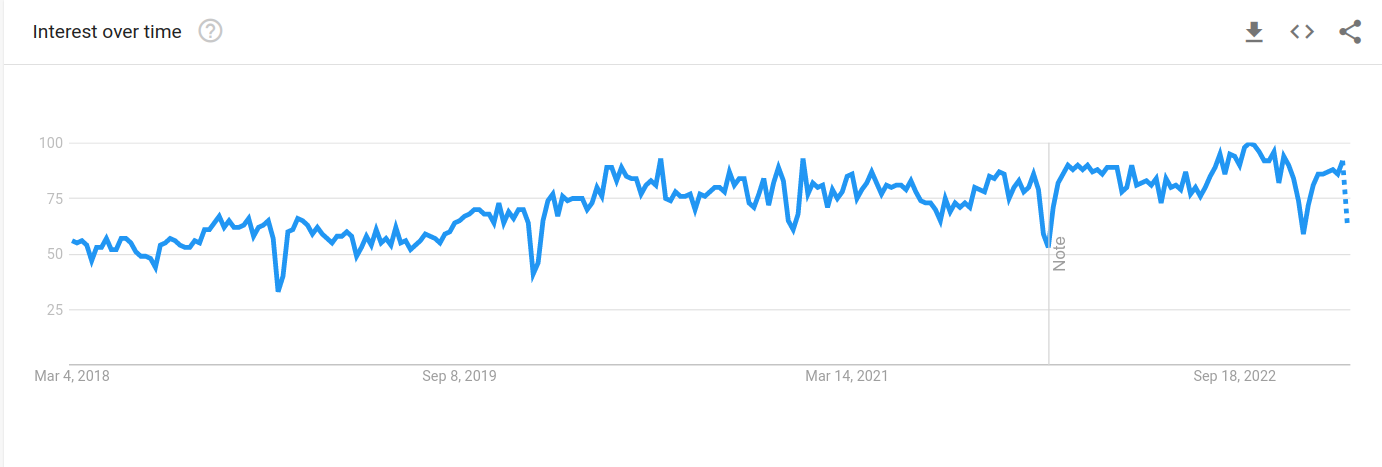

While the increase in phishing scams across the world is worrying, this does not necessarily translate into people actively searching for a solution. While Google search volumes for phishing have risen steadily in the last 5 years, more specific keywords related to consumer phishing protection were few and far between, with near-insignificant volumes. SEO would probably be a difficult acquisition channel for this product - note taken.

Google Trends report for the keyword ‘phishing’ spanning the last 5 years

SEO would not have been a channel I could have used to test market interest anyway due to the time it takes to build up. PPC is more apt for quickly testing the value proposition, the market interest, as well as sourcing candidates for more in-depth customer development interviews later.

After some sketching, I found an animator on Upwork that turned my sketches into a flashy animation that showed how I initially envisioned the product to work (Substack does not support animations so you’ll have to go to the landing page here to see it).

I embedded this on a landing page built with Webflow (which will still be accessible for a while here), together with a logo created with AI from Brandmark, a CTA and a form to capture email addresses. Once the email address was filled in, a survey was shown with a number of questions to better understand the lead and their motivation.

The questions I used in the survey (for which I used involve.me) were to understand the following things about the user:

- How tech-savvy are they?

- What is their risk perception around phishing? How confident are they of their own, and their family’s, capacity to detect phishing attacks?

- Do they currently have any phishing protection, or AV software, that they are paying for? Are they accustomed to paying for security software?

- What is their willingness to pay, measured using the Van Westendorp pricing model?
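For readers who have not come across the Van Westendorp model: each respondent gives four price thresholds (too cheap, cheap, expensive, too expensive), and the price points of interest sit where the cumulative curves cross. The sketch below shows one simple way to compute two of them in Python. The responses and the price grid are hypothetical, since the survey never collected enough answers to run this, and the function names are my own; variants of the method differ in how they define the crossings.

```python
def share_at_or_above(thresholds, price):
    """Share of respondents whose threshold is at or above the given price."""
    return sum(t >= price for t in thresholds) / len(thresholds)


def share_at_or_below(thresholds, price):
    """Share of respondents whose threshold is at or below the given price."""
    return sum(t <= price for t in thresholds) / len(thresholds)


def crossing_price(falling, rising, prices):
    """First grid price where the rising curve meets or exceeds the falling one."""
    for price in sorted(prices):
        if share_at_or_below(rising, price) >= share_at_or_above(falling, price):
            return price
    return None  # the curves never cross on this price grid


def van_westendorp(responses, prices):
    """Approximate two classic Van Westendorp price points on a price grid."""
    too_cheap = [r["too_cheap"] for r in responses]
    cheap = [r["cheap"] for r in responses]
    expensive = [r["expensive"] for r in responses]
    too_expensive = [r["too_expensive"] for r in responses]
    return {
        # "Too cheap" (falling) crosses "too expensive" (rising).
        "optimal_price_point": crossing_price(too_cheap, too_expensive, prices),
        # "Cheap" (falling) crosses "expensive" (rising).
        "indifference_price_point": crossing_price(cheap, expensive, prices),
    }


# Hypothetical monthly prices in dollars, NOT data from the actual survey.
responses = [
    {"too_cheap": 1, "cheap": 3, "expensive": 6, "too_expensive": 10},
    {"too_cheap": 2, "cheap": 4, "expensive": 8, "too_expensive": 12},
    {"too_cheap": 1, "cheap": 2, "expensive": 5, "too_expensive": 8},
    {"too_cheap": 3, "cheap": 5, "expensive": 9, "too_expensive": 15},
    {"too_cheap": 2, "cheap": 3, "expensive": 7, "too_expensive": 10},
]
price_points = van_westendorp(responses, prices=range(1, 16))
print(price_points)
```

With a handful of respondents the curves are very coarse, so the crossings are only as precise as the price grid. A real analysis would need many more responses than a test like this one produced.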

With that set up, I needed to get some traffic. I decided to use the following ad platforms:

- Facebook Ads

- Google Adwords

- Reddit

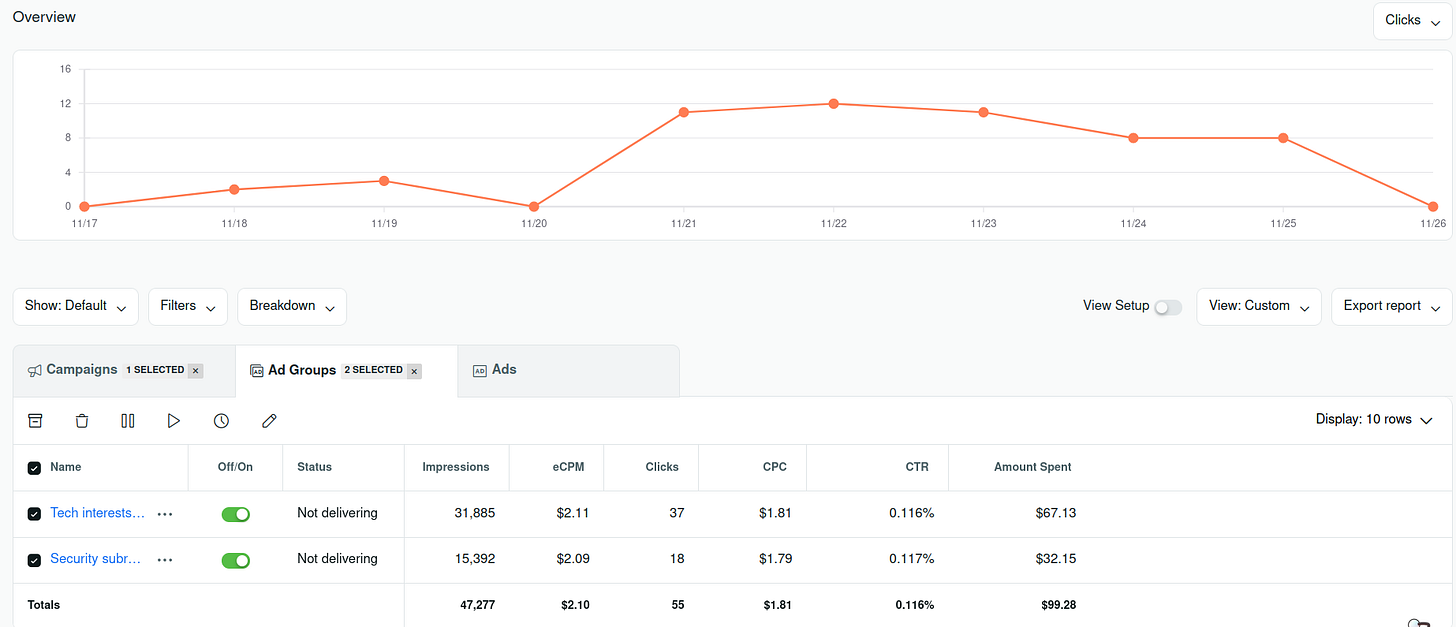

Using the same animation, I created a number of ad sets tied to variations on my value proposition on each of these platforms. I used the two customer segments that I had initially hypothesised, and set them to run for a week. Reddit allowed me to choose subreddits, so I chose to also target several tech & security based subreddits.

I spent a little over $300 during this time, which bought a significant number of impressions but a miserable conversion rate: my value proposition was not landing. By the end of the week I had only 3 signups. While I’m sure that the ads and the targeting could have been improved further, it seemed highly likely that these lackluster results were largely due to low market interest in what I was offering (at least on the channels I ran these tests on).
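To put those numbers in perspective, here is the back-of-the-envelope arithmetic as a small Python sketch. The spend and signup figures come from the test above (with $300 treated as a lower bound on spend); the click count is a hypothetical placeholder, included only to show how a landing page conversion rate would be derived.

```python
def rate(successes, attempts):
    """Fraction of attempts that succeeded, or 0.0 when there were no attempts."""
    return successes / attempts if attempts else 0.0


def cost_per_signup(spend, signups):
    """Ad spend divided by signups; infinite when nobody signed up."""
    return spend / signups if signups else float("inf")


SPEND_USD = 300.0          # "a little over $300", so this is a lower bound
SIGNUPS = 3                # signups at the end of the week
HYPOTHETICAL_CLICKS = 150  # placeholder, not a figure from the test

cost = cost_per_signup(SPEND_USD, SIGNUPS)
landing_conversion = rate(SIGNUPS, HYPOTHETICAL_CLICKS)

print(f"Cost per signup: at least ${cost:.0f}")
print(f"Landing page conversion (hypothetical clicks): {landing_conversion:.1%}")
```

At roughly $100 or more per email signup, before a single paying customer, the acquisition cost would be very hard to recover with a low-priced consumer product.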

Miserable CTR on Reddit

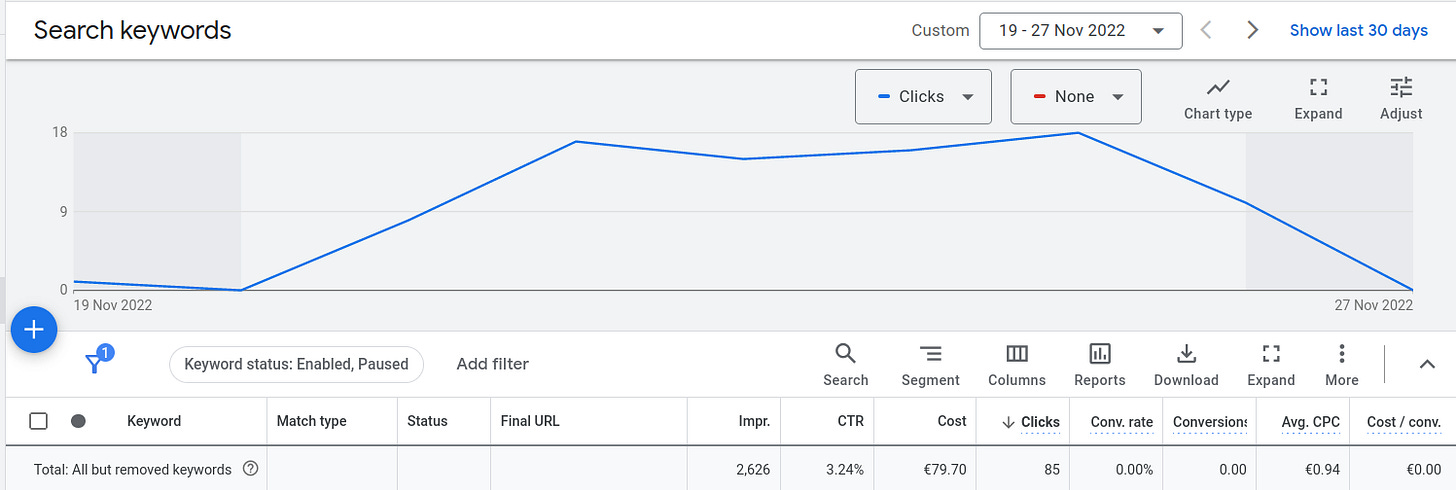

The CTR for Adwords was actually a decent 3.24%. However, the keywords that were driving these clicks were quite generic (“how to stop phishing”, “how to stop scam mails”, “phishing email security”), and the search intent here was probably informational: people not looking for a product, or people searching for a corporate solution. While I am guessing at the intent, the click rate on my CTAs clearly showed that my value proposition was not landing.

Acceptable CTR on Adwords but these visitors were looking for something else

Apart from testing these hypotheses through online ads, I also interviewed a number of people who fit my hypothesised customer profiles. The results here were disheartening as well. Everyone agreed that this was a problem, but the degree of risk perception wasn't as high as I had imagined, as many people assume they can spot these emails themselves. And the older, less tech-savvy people are not accustomed to buying services online because, ironically enough, they are worried about being scammed.

While the younger, tech-savvy people did see this as a solution they could potentially purchase for their family members (the other customer segment), none of them had been worried enough about this to have actively gone out and searched for a solution before. The assumed behaviour of buying software for your family members, security or otherwise, does not seem to be prevalent among the people I spoke with.

Since I wasn’t able to identify any significant market interest in the solution, I did not have a suitably sized segment of interested individuals to test their willingness to pay.

This hypothesis remains unvalidated and risky.

Conclusion

Two out of the three riskiest hypotheses could not be validated within the time and resources I had set aside for this research, which resulted in me scrapping the project.

Under no circumstances was I willing to take a bet that might result in me directly competing against Google on their own platform and product, and my minimal customer acquisition test with the value proposition I had envisioned returned abysmal results. If there had been enough interest in the solution, but the willingness to pay was extremely low (as it usually is with consumer products of this type), I might have been tempted to continue down this path to see what other options were available to increase the ACV over time (additional security features) or what other business models might work (pivoting to B2B or monetizing the corpus of phishing emails that I would accumulate).

It is possible that a different set of acquisition channels would have been better at reaching the types of customers that would be interested in such a product (for example, selling to organisations that are in charge of protecting the computers of non-tech-savvy consumers, such as schools and security awareness training organisations). However, these are not channels that I am familiar with, or even want to build something on.

I am by no means saying there is no business opportunity here, and maybe someone better versed in selling to consumers than I am would see this differently, but for me these initial tests showed that there is too much risk here to go any further.

Note: If anyone reading this is interested in picking up this project, get in touch and I will be happy to share all the data and contacts that I have collected for this research.